Marketers work under the assumption that their efforts influence user engagement. But is there evidence to support that assumption?

The answer lies in the quality of the datastream (the signal) sent to us as a measurement provider. By analyzing the quality of that signal, we can draw better conclusions about the relationship between marketing and user engagement.

A typical signal

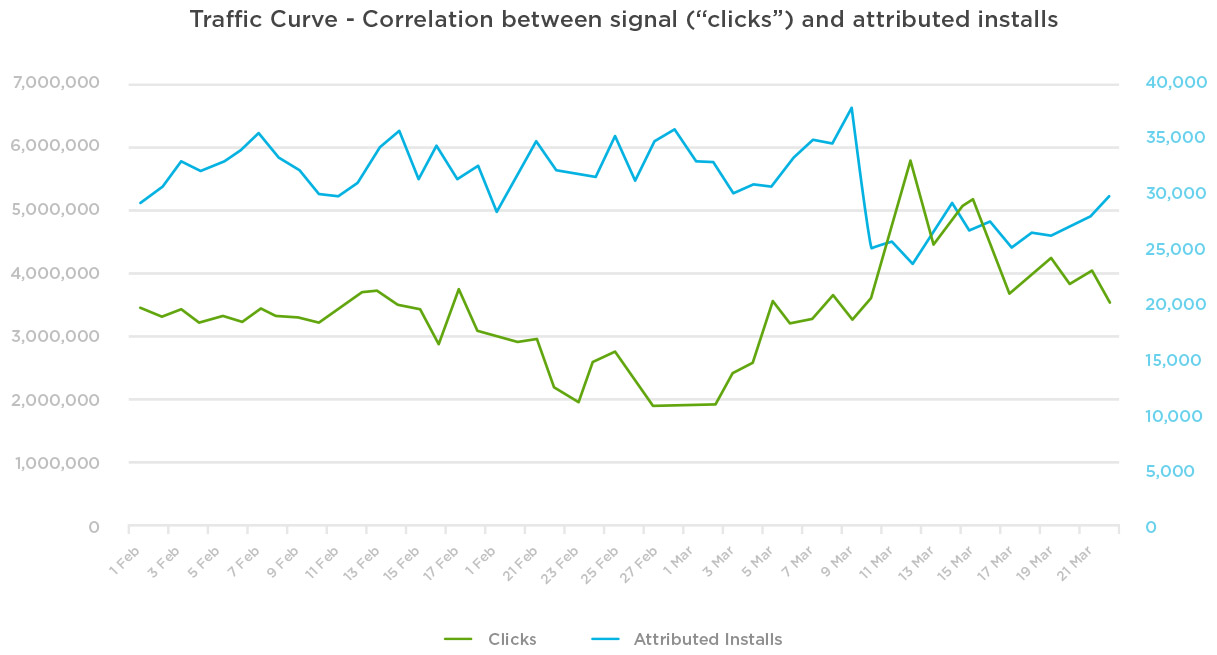

It’s reasonable to believe that your marketing efforts correlate with the effect of those efforts. In other words, your signal (clicks) should correlate with the effect (attributed installs).

In practice, we often see little to no correlation between the signal (clicks) and attributed installs. This makes it difficult to infer a causal link between paid media efforts and the attributed effect.

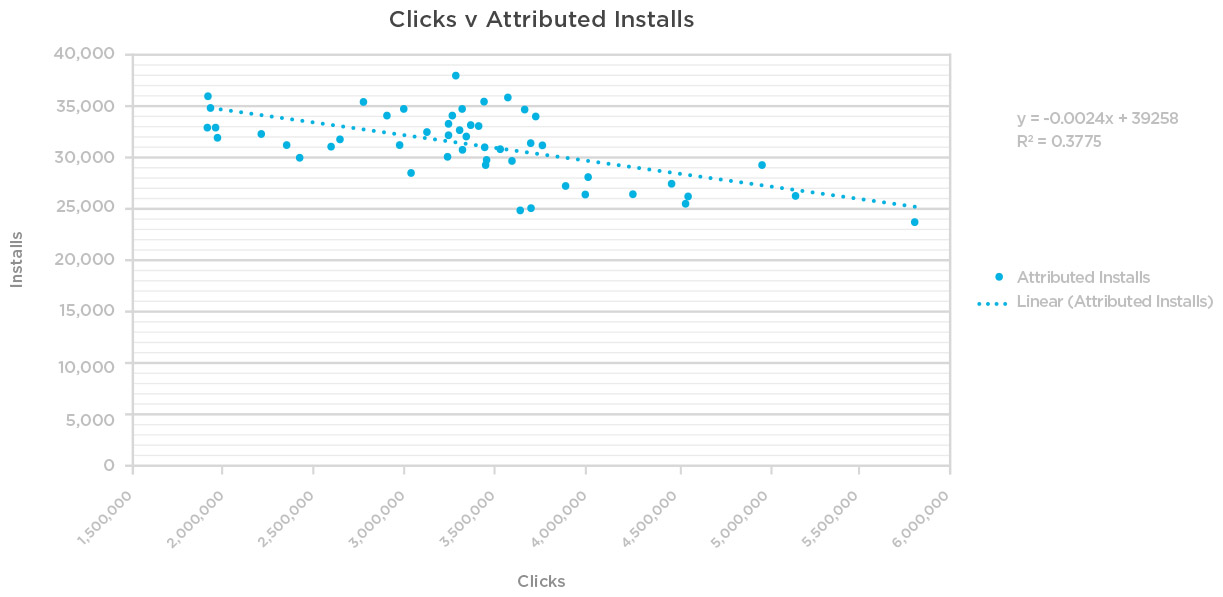

If we fit a trendline to the data, we get an R-squared of 0.37. If the volume of clicks and the installs attributed to those clicks were perfectly correlated, we’d see an R-squared of 1; completely uncorrelated data would give 0. R-squared only measures how tightly the points follow the line, not which way the line points; the direction comes from the trendline’s slope. Unfortunately, the graph above represents what we typically see: a poor but NEGATIVE correlation, with the trendline sloping downward so that more clicks coincide with fewer attributed installs. We don’t believe that is reasonable.

This kind of discrepancy makes it difficult, or impossible, to plan media spend. We should be able to extrapolate the number of clicks required to obtain a given number of installs. With data like this, how do you budget your ad spend?

To rectify this, the data needs to be cleaned up. Attribution relies on a good signal: if you can’t trust your signal, you can’t trust your attribution, and if you can’t trust attribution, you can’t trust measurement.

With a poor signal, you’re at risk of attribution fraud through click injection. Consider the industry we work in: the last-click attribution model creates strong incentives for fraud, because whoever reports the final click before an install receives the credit.
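Click injection typically works by firing a click in the moments just before an install completes, so injected clicks tend to show an implausibly short click-to-install time (CTIT). The sketch below flags such installs. It is a simplified heuristic, not a complete fraud filter: the `AttributedInstall` record, the use of first app open as the install time, and the 10-second threshold are all hypothetical assumptions you would tune for your own app.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class AttributedInstall:
    install_id: str
    partner: str
    click_time: datetime
    install_time: datetime  # hypothetical: time of first app open


# Hypothetical threshold; a realistic value depends on app size and download times.
MIN_PLAUSIBLE_CTIT = timedelta(seconds=10)


def suspected_click_injection(installs, min_ctit=MIN_PLAUSIBLE_CTIT):
    """Return installs whose click-to-install time is implausibly short.

    A click that lands only seconds before the first open is suspicious, and
    a click recorded after the install (negative CTIT) is flagged as well.
    """
    return [i for i in installs if i.install_time - i.click_time < min_ctit]


t0 = datetime(2024, 1, 1, 12, 0, 0)
sample = [
    AttributedInstall("a", "partner_x", t0, t0 + timedelta(seconds=3)),
    AttributedInstall("b", "partner_y", t0, t0 + timedelta(minutes=5)),
]
for install in suspected_click_injection(sample):
    print(install.install_id, install.partner)
```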

A poor signal may also reflect an overly long lookback window, which credits installs to ads that had little or no causal influence on them and so misrepresents cause and effect between advertisements and user engagement.
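One way to gauge whether a lookback window is too long is to see how much of your attribution depends on engagements that happened long before the install. The sketch below takes the click-to-install times (CTITs) of installs attributed under your current window and reports what share a shorter window would stop crediting. The function name and sample durations are hypothetical, and the candidate windows are illustrative rather than recommendations.

```python
from datetime import timedelta


def share_beyond_window(ctits, window):
    """Fraction of attributed installs whose click-to-install time exceeds window.

    These are the attributions a shorter lookback window would stop crediting.
    """
    if not ctits:
        return 0.0
    return sum(1 for ctit in ctits if ctit > window) / len(ctits)


# Hypothetical CTITs for installs attributed under a long window.
ctits = [timedelta(minutes=4), timedelta(hours=3), timedelta(days=2),
         timedelta(days=9), timedelta(days=21)]

for days in (1, 7, 30):
    share = share_beyond_window(ctits, timedelta(days=days))
    print(f"{days:>2}-day window would drop {share:.0%} of these attributions")
```

A large share of credit riding on clicks that are days or weeks old is weak evidence that those clicks caused the installs.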

Lastly, it may result from media partners sending a mixed signal, such as impressions reported as clicks.
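When impressions are reported as clicks, a partner’s “click” volume balloons while its attributed installs barely move, so its click-to-install rate drops far below that of partners sending genuine clicks. The sketch below computes that rate per partner and flags outliers. It is a heuristic only: the `(partner, clicks, attributed_installs)` row format and the 0.1% threshold are hypothetical assumptions, and a low rate can have other causes that deserve investigation.

```python
from collections import defaultdict


def flag_low_conversion(rows, min_rate=0.001):
    """Return {partner: click_to_install_rate} for partners below min_rate.

    rows: iterable of (partner, clicks, attributed_installs) tuples, e.g. daily totals.
    min_rate: hypothetical threshold; calibrate against partners you trust.
    """
    clicks = defaultdict(int)
    installs = defaultdict(int)
    for partner, c, i in rows:
        clicks[partner] += c
        installs[partner] += i
    rates = {p: installs[p] / clicks[p] for p in clicks if clicks[p] > 0}
    return {p: rate for p, rate in rates.items() if rate < min_rate}


# Hypothetical daily rows (illustrative, not real data).
rows = [("A", 10_000, 150), ("B", 500_000, 40), ("A", 5_000, 60), ("C", 0, 0)]
print(flag_low_conversion(rows))
```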

Signal clean-up

To clean up your attribution signal and improve your campaign results, address each of these areas:

- Have media partners send impressions and clicks separately

- Shorten lookback windows

- Implement quality control metrics to ensure clean data

- Measure media partner quality
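The first two items combine naturally: once partners report impressions and clicks separately, each engagement type can get its own, shorter lookback window. The sketch below shows last-touch attribution under that setup. Giving clicks precedence over impressions is a common convention assumed here, and the `Engagement` record plus the 7-day click and 24-hour impression windows are hypothetical values for illustration, not Kochava defaults.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Literal, Optional


@dataclass
class Engagement:
    partner: str
    kind: Literal["click", "impression"]  # reported separately by the partner
    time: datetime


# Hypothetical windows; real values depend on the app and partner agreements.
WINDOWS = {"click": timedelta(days=7), "impression": timedelta(hours=24)}


def attribute(install_time, engagements, windows=WINDOWS) -> Optional[Engagement]:
    """Last-touch attribution that prefers clicks over impressions.

    An engagement qualifies only if it happened before the install and within
    the lookback window for its kind. The most recent qualifying click wins;
    an impression is credited only when no click qualifies.
    """
    for kind in ("click", "impression"):
        candidates = [e for e in engagements
                      if e.kind == kind
                      and timedelta(0) <= install_time - e.time <= windows[kind]]
        if candidates:
            return max(candidates, key=lambda e: e.time)
    return None  # no engagement within its window: treat the install as organic


t = datetime(2024, 1, 10, 12, 0)
engagements = [
    Engagement("A", "click", t - timedelta(days=10)),    # outside the click window
    Engagement("B", "impression", t - timedelta(hours=2)),
    Engagement("C", "click", t - timedelta(days=2)),
]
winner = attribute(t, engagements)
print(winner.partner if winner else "organic")
```

Because impressions can no longer masquerade as clicks, they cannot claim the longer click window, which removes one source of the mixed signal described above.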

Take a step back from key performance indicators and look at your signal. Is there a positive relationship between your clicks and installs? Do more clicks result in more installs? Is there any relationship at all? If not, there’s work to be done, and Kochava can help.

To learn more about how to interpret and clean an attribution signal, read “Having A Poor Signal Results In Poor Measurement” by Grant Simmons, published on Medium.